Self-Hosted AI Chatbots are one of the most effective ways for modern enterprises to scale their digital operations without the burden of unpredictable monthly overheads. In the race to adopt Artificial Intelligence, many businesses are quickly hitting a wall: the escalating costs of cloud-based AI services and API calls. While the initial appeal of “pay-as-you-go” API access is clear, scaling an AI solution that is billed per token can lead to large, unexpected monthly bills that quickly erode your ROI.

This is where Self-Hosted AI Chatbots offer a transformative alternative. For CTOs and Operations Managers focused on sustainable growth and predictable budgets, the case for owning your AI infrastructure isn’t just about control—it’s about dramatically slashing long-term operational costs and unlocking true, unlimited scalability.

The Hidden Cost Traps of Cloud AI APIs

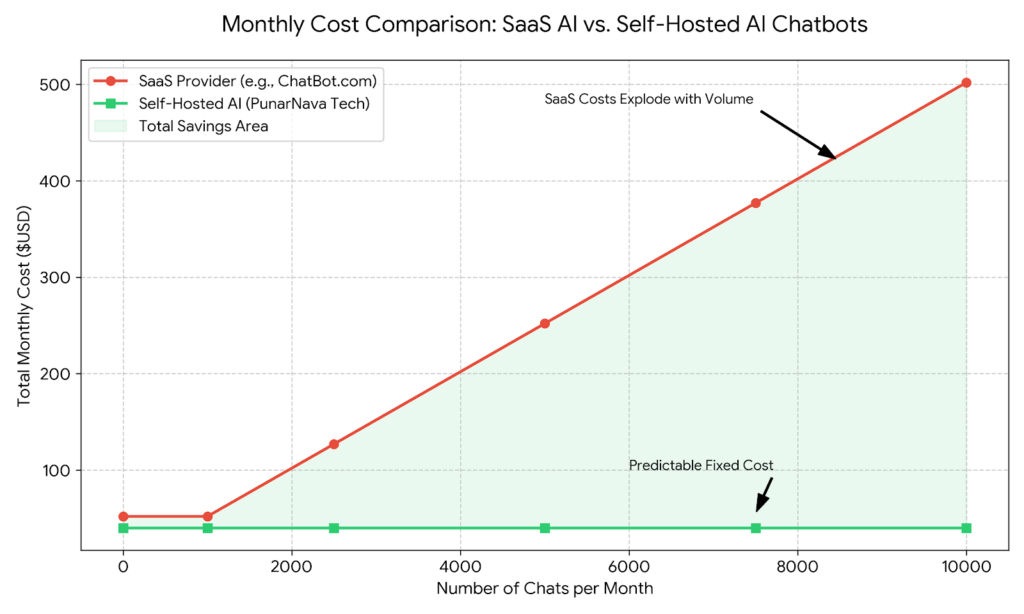

When you use a cloud-based AI, you’re essentially renting intelligence. This comes with several financial pitfalls that can stifle a growing company. First, there are the variable token fees. Every interaction, every query, and every generated response incurs a fee. As your customer base grows, your expenses grow linearly with usage, so higher volume never lowers your unit cost, which is the opposite of how a scalable business should operate. According to recent industry reports on AI Total Cost of Ownership (TCO), for sustained, high-volume workloads the breakeven point for on-premise AI infrastructure can often be reached in less than a year.
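The breakeven logic can be made concrete with a little arithmetic. The sketch below is a simplified model, not a TCO calculator: it assumes API spend is purely per-token, that self-hosting costs an upfront hardware purchase plus a flat monthly operating cost (power, hosting, staff time), and that traffic is constant. All prices and volumes, as well as the function names `monthly_api_cost` and `breakeven_month`, are hypothetical placeholders chosen for illustration.

```python
import math
from typing import Optional

# All prices and volumes here are hypothetical placeholders, not vendor quotes.


def monthly_api_cost(interactions: int, tokens_per_interaction: int,
                     usd_per_million_tokens: float) -> float:
    """Variable API spend for one month: grows linearly with traffic."""
    total_tokens = interactions * tokens_per_interaction
    return total_tokens / 1_000_000 * usd_per_million_tokens


def breakeven_month(api_usd_per_month: float, hardware_usd: float,
                    ops_usd_per_month: float) -> Optional[int]:
    """First whole month in which cumulative self-hosted cost
    (upfront hardware + monthly operations) no longer exceeds
    cumulative API spend. Returns None if it never breaks even."""
    monthly_savings = api_usd_per_month - ops_usd_per_month
    if monthly_savings <= 0:
        # Operating the servers costs as much as the API: no payback.
        return None
    return math.ceil(hardware_usd / monthly_savings)


# Hypothetical workload: 1M interactions/month, 1,500 tokens each, $2 per 1M tokens.
api = monthly_api_cost(1_000_000, 1_500, 2.0)
print(api)                                  # 3000.0
print(breakeven_month(api, 30_000, 800))    # 14
print(breakeven_month(api, 30_000, 3_500))  # None
```

With these made-up figures the hardware pays for itself in month 14; higher traffic shortens that period, while low traffic or high operating costs can mean it never pays off, which is why the volume assumption matters.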

Secondly, vendor lock-in becomes a major financial risk. Once your entire workflow is integrated into a specific provider’s API, you are at the mercy of their pricing updates. If they decide to raise their prices, you must either pay or undertake a costly technical migration. Self-Hosted AI Chatbots largely remove this risk by giving you the keys to the engine.

7 Ways Self-Hosted AI Chatbots Drive Unbeatable Cost-Efficiency

Embracing Self-Hosted AI Chatbots shifts much of your spending from recurring operational expenses (OpEx) to a strategic, fixed capital asset (CapEx).

- Predictable Spending: Your primary costs are the infrastructure you own and the power and staff to run it, which are largely fixed. Once set up, the average cost per interaction falls steadily as volume grows.

- Eliminate Token Fees: No more paying per prompt. Your AI can process millions of interactions with no per-token charges; once the server is running, the marginal cost is mainly electricity and upkeep.

- Unlimited Scalability: Scale your AI processing power by adding internal resources, not by paying per-unit fees to a middleman.

- Optimal Resource Utilization: Size your hardware precisely to your workload. You aren’t paying the margin a cloud provider charges to cover its massive data centers.

- Long-Term ROI: The initial investment in Self-Hosted AI Chatbots pays dividends over time. You build a valuable asset that stays on your balance sheet.

- Full Customization: Fine-tune open-weight models such as Meta’s Llama on your own hardware without incurring additional “training” API costs.

- Reduced Legal Risk: As we discussed in our previous post on AI data privacy, avoiding a single data breach can save a company millions in potential legal fees and fines.
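To see how the cost per interaction behaves as volume grows, the following sketch spreads a fixed monthly self-hosting cost (straight-line hardware amortization plus operations) over traffic and compares it with a flat per-interaction API price. It is a minimal illustration under stated assumptions: every figure and the names `selfhost_cost_per_interaction` and `breakeven_volume` are hypothetical, and it assumes the hardware can absorb the volume. Past that capacity you add servers, which raises the fixed cost.

```python
# Hypothetical figures for illustration only.


def selfhost_cost_per_interaction(hardware_usd: float, amortization_months: int,
                                  ops_usd_per_month: float,
                                  interactions_per_month: int) -> float:
    """Average self-hosted cost per interaction: fixed monthly cost
    (straight-line hardware amortization + operations) divided by traffic."""
    monthly_fixed = hardware_usd / amortization_months + ops_usd_per_month
    return monthly_fixed / interactions_per_month


def breakeven_volume(hardware_usd: float, amortization_months: int,
                     ops_usd_per_month: float,
                     api_usd_per_interaction: float) -> float:
    """Monthly interactions above which self-hosting is cheaper per interaction."""
    monthly_fixed = hardware_usd / amortization_months + ops_usd_per_month
    return monthly_fixed / api_usd_per_interaction


# Assumed API price: 1,500 tokens per interaction at $2 per 1M tokens.
API_USD_PER_INTERACTION = 1_500 / 1_000_000 * 2.0

# Assumed setup: $36,000 of hardware amortized over 36 months, $1,000/month to run.
for volume in (100_000, 1_000_000, 10_000_000):
    cost = selfhost_cost_per_interaction(36_000, 36, 1_000, volume)
    print(f"{volume:>10,} interactions/month: "
          f"${cost:.4f} self-hosted vs ${API_USD_PER_INTERACTION:.4f} via API")

print(f"Breakeven: ~{breakeven_volume(36_000, 36, 1_000, API_USD_PER_INTERACTION):,.0f} interactions/month")
```

The self-hosted figure shrinks in proportion to volume but never reaches zero, and below the breakeven volume the API remains cheaper per interaction under these assumptions.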

Strategic Ownership of AI Infrastructure

By moving away from the “rental” model of the cloud, companies can finally achieve a tech stack that grows with them. Self-Hosted AI Chatbots allow you to reallocate your budget from “API maintenance” to “Product Innovation.” This shift is what separates market followers from market leaders who own their technology.

When you deploy Self-Hosted AI Chatbots, you are building intellectual property that adds to your company’s valuation. Unlike a subscription, which is a pure cost, a self-hosted model is an asset that grows more valuable as you fine-tune it on internal data and refine its responses. You are effectively building a custom “brain” for your company that you own and control.

Technical Sustainability and the Future

In the long run, the efficiency of Self-Hosted AI Chatbots is also linked to energy and resource management. By running models locally or on private clouds, you can optimize for specific tasks rather than running massive, general-purpose models for every tiny query. This can reduce the computational load and, by extension, the electricity and cooling costs associated with high-scale AI operations.

Conclusion: Invest in Ownership Today

The decision to implement Self-Hosted AI Chatbots is a strategic shift. It’s about building an AI future that is not only powerful and private but also financially sustainable. For CTOs and business owners, this means clearer budgets, greater control, and the ability to scale confidently without the fear of the next monthly invoice.